Filling In the Gaps

Aylin Woodward listens in as a neuroscientist studies our ability to hear and process language. Illustrated by Jacki Whisenant.

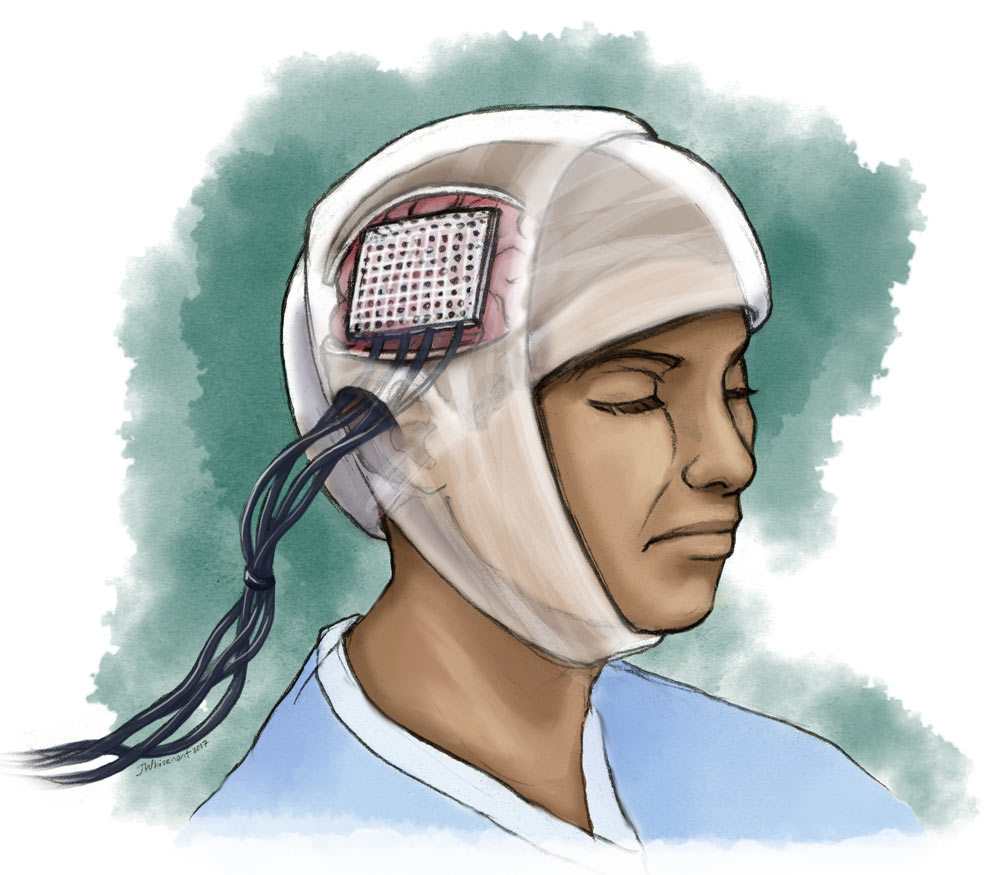

Illustration: Jacki Whisenant

A young man with epilepsy lay quietly on a bed in the neurosurgery ward at the UC San Francisco Parnassus Hospital, which crowns one of the tallest hills in the city. He listened intently for his instructions.

“I’m going to say banana, and all I want you to do is repeat that word, okay?” The researcher’s disembodied voice floated from behind a cart burdened with wires, amplifiers and a mammoth computer monitor in the corner of the room.

The voice belonged to neuroscientist Matt Leonard, who flipped on an amplifier and watched as a colorful array of squiggly lines bounced across the computer screen. Amid the aqua blues, lime greens, and bright oranges that represented electrical activity in the young man’s brain, Leonard was looking for something. He hoped the rises and dips in the colored lines might help unlock the secrets behind our brains’ ability to perceive spoken language.

Understanding how the brain transforms acoustic information (sounds, syllables, words) into language is one of the most important questions in cognitive neuroscience. Leonard’s latest work examines a process called “phoneme restoration,” in which part of a word is completely replaced by noise, yet listeners still report hearing the whole word.


Developments in brain imaging techniques since the 1980s have given scientists like Leonard further insights into the biological foundations of understanding speech. Together he and neurosurgeon Edward Chang, leader of the UCSF lab where Leonard works, are striving to understand not so much where language processing happens in the brain, but how the brain organizes what we hear into language.

Researchers have already localized much of language processing to an area of the brain called the perisylvian cortex. It surrounds the Sylvian fissure, a deep groove that runs along the side of the brain above the ear. Broca’s and Wernicke’s areas, two regions that have been linked to producing and understanding speech, respectively, are key hubs in this language network.

But it’s completely unknown how the neurons in these areas help the brain compute understandable words from sounds, Chang says.

“A lot of neuroscience is organized around where in the brain these functions are happening, but that doesn’t tell you how,” he says. “The challenge is understanding what actual computations take place to give rise to the human function of language.”

To answer this question, he and Leonard are studying a group of volunteers with epilepsy using a method called electrocorticography, or ECoG. These patients have part of their skull removed, allowing doctors to implant electrodes directly onto their brain. The electrodes pinpoint where the epileptic seizures happen, but for Leonard, there is a side benefit: He can monitor the information recorded by the electrodes, providing a glimpse of what’s happening in the brain in real time.

Graphic: Aylin Woodward. Artwork by Jacki Whisenant.

Alphabet soup

Chang and Leonard’s ECoG technique is one of the most advanced available to neuroscientists. Typically, researchers have used three other imaging methods to understand where and when things are happening in the brain.

The first, magnetoencephalography (MEG), involves placing subjects in “what looks like a giant hair dryer,” says Leonard, so scientists can record tiny magnetic fields produced by regions of the brain. The second, electroencephalography (EEG), involves monitoring the brain’s electrical activity using metal electrodes attached to the scalp. However, these two methods are less than ideal. MEG is one step removed from neural activity, and EEG must record brain activity through the skull, scalp and other tissue. In both cases, the map of what the brain is doing isn’t as clear as it could be. Neither technique can get down to the level of neurons.

The third method, functional magnetic resonance imaging (fMRI), doesn’t even look at electrical activity. Rather, it gauges the amount of oxygen in the blood flowing through the brain, which reflects neural activity, researchers believe. Many neuroscientists, including Matthew Davis from the University of Cambridge in England, like to rely on fMRI studies: “fMRI records the whole brain simultaneously,” he says. “It’s the best non-invasive method we have.”

But blood flow changes far more sluggishly than speech unfolds. fMRI operates on a second-to-second scale, while the sounds of speech change more than 100 times faster. So fMRI is not agile enough to track the brain’s activity as it processes speech. Ben Dichter, a graduate student in Chang’s lab, likens fMRI to “putting a microphone at an intersection and trying to understand what’s going on by the amount of noise.”

After finishing his Ph.D. at UC San Diego, Leonard found that the question he was interested in—how the brain processes and understands language—was not easily addressed by these three options.

“I really wanted a method that would help me get away from the question of where in the brain things are happening, and instead would address what is happening,” Leonard says. So he joined the Chang lab, a group known for its work with epilepsy patients and ECoG.

ECoG is the only method that can track what’s happening in the brain during speech processing at the required speed, while simultaneously recording from specific patches of brain tissue. It enables Leonard to examine rhythmic neural activity in the high-frequency range, roughly between 60 and 200 cycles per second. These fast brain rhythms, which should not be confused with the frequencies of the sounds themselves, reflect how local groups of neurons respond as we hear speech.

Leonard compares what this activity looks like when the patient hears different words, allowing him to see which clusters of neurons in and around the perisylvian cortex are triggered, and when. “ECoG is valuable because it works fast enough [to] see the brain’s activity when the patient hears one word, in one instance,” he says.

Neuroscientist Adeen Flinker, head of the ECoG lab at the New York University School of Medicine, wasn’t surprised by Leonard’s move to UCSF. “The Chang lab is doing forefront work,” Flinker says.

In search of ECoG

Leonard’s eyes glint through the lenses of his snappy black Ray-Bans as he explains how difficult it is to collect this rare ECoG data—and how spoiled he feels to have found a place in Chang’s lab.

“I have friends who have gone off and started their own ECoG labs,” says Leonard, age 36. “They may get 10 minutes of data every three months.” In contrast, Leonard works with a new patient every two weeks, and he gets to spend a couple of hours every day with each one.

But despite its advantages, the ECoG method isn’t easy to stomach.

“ECoG is the ultimate invasive technique,” says David Poeppel, a neuroscientist at NYU. “And it is the only invasive brain mapping procedure done on humans.”

The method requires researchers to tunnel wires through the scalp and connect them to hundreds of tightly packed electrodes on the patient’s exposed brain. An enormous amplifier boosts the faint electrical signals so that Leonard and his team can display and analyze them.

ECoG patients are as unique as the recordings pulled from their brains. “No human test subject in their right mind would volunteer for this procedure,” says Leonard. The electrode implant surgery and the post-operative period are boring and painful. The patients lie immobilized in their hospital beds with heavily bandaged heads. Many are in too much pain from the temporary removal of part of their skull to even watch television. But for some people suffering from seemingly incurable epilepsy, it becomes a necessity.

Chang suggests the invasive procedure as a “last-ditch resort,” in the hopes of discovering where a patient’s epileptic seizures might start in the brain.

These patients undergo surgery, in which Chang embeds an electrode array onto the brain tissue, and then must wait in post-op for what might be weeks until they have a seizure. Data from the electrodes helps doctors localize the seizure when it happens. Then, once Chang knows which part of the brain is seizing, he can remove it surgically.

While the patients wait after surgery, Leonard also monitors their brain activity as he puts them through the paces of various speech tasks.

Leonard says it’s rare for a patient to decline to take part in this research. One patient, the banana-repeating young man, was so eager to make the most of a horrible situation that he helped Leonard design an experiment before going into surgery.

Filling in the gaps

Leonard admits his academic career is fortuitously timed. A few hospitals with epilepsy monitoring units, including UCSF and New York University, had conducted ECoG procedures. But controlled research using the technique has exploded only in the last few years.

That research is now paying off. In a December 2016 study published in Nature Communications, Leonard and his colleagues reported that data from epilepsy patients shed light on the mechanism behind the brain’s ability to fill in inaudible sections of speech—the phenomenon called phoneme restoration. They tested whether their five subjects heard words such as “faster” or “factor,” or “novel” or “nozzle,” when the middle phoneme—a unit of sound that helps us distinguish between words—was masked by noise.

The work is aimed at helping neuroscientists understand how the brain compensates when words are obscured by other sounds, such as when people are chatting in a crowded bar or walking down a traffic-filled street. The team found that the brain responded to missing speech sounds as if those sounds were actually present. For instance, patients reported hearing “faster” even though the recording they heard was “fa[noise]ter.”


More surprisingly, Leonard discovered that a region of the brain physically separate from the speech-processing area actively predicted which word a listener would hear, three-tenths of a second before the brain’s auditory cortex began to process the noise.

“This teaches us that the sounds that enter our ear are only part of what we hear,” says Chang, one of the study’s coauthors. “A lot of other things—like what we expect to hear and what we pay attention to—all play a role in terms of what we actually hear.”

To Leonard, this discovery is evidence that the brain makes predictions, perhaps based on which of the two possible words the brain heard more recently. These predictions influence what word, precisely, the brain thinks it hears.

Leonard also found this word prediction process wasn’t influenced by the context of a sentence. For instance, even if a patient was primed to hear the word “faster,” as in “I drove my car fa[noise]ter,” she was just as likely to hear the word “factor.”

How the brain compensates for missing acoustic information is just one avenue scientists can use to explore how it processes speech. To Chang, understanding this process is critical. “If we knew how it worked, then we would be able to rehabilitate those language circuits when they’re injured by a stroke, or a disease like Alzheimer’s,” he says.

Arthur Samuel, a psycholinguist from Stony Brook University who was one of the first to describe the restoration effect, says the Chang lab is leading the way: “The use of ECoG allows us to take an important step forward by providing otherwise unavailable detail about the regions of the brain that are computing auditory information.”

From process to prosthetic

On a Wednesday in February, eight researchers crowd the Chang lab, sitting in front of monitors. Their eyes rove over the computer code needed to analyze the latest ECoG data; some of them nod to sounds coming through their bulky Bose headphones. Examining and processing the data are time-consuming tasks. The phoneme restoration study took 28 months to complete.

But Leonard is confident the lab’s persistence will pay practical dividends by helping people who can’t communicate. The clearest application, both he and graduate student Ben Dichter say, is a speech prosthetic: a device that could record neural activity and decode what a person is trying to say without her having to speak aloud.

Though the team is a long way off from that goal, UCSF’s work may help bridge the gap between two factions of neuroscientists: the animal researchers and the human researchers.

Traditionally, neuroscientists have thought that they must study humans to understand language, as animals do not speak. However, working with humans is a tricky business—you can’t genetically manipulate your test subjects or sacrifice them to look at recently dead brain tissue under a microscope.

Scientists have studied how brains process sound in non-human species, including mice, rats, macaques, and even ferrets. But it’s a tall order to connect these experiments—which tend to examine the behavior of single neurons and don’t look at language specifically—to larger-scale studies done on 10,000 neurons in the human brain.

ECoG may offer a solution. The electrode arrays can cover large areas of the brain, while also allowing scientists like Leonard to examine the neural activity happening at each of hundreds of individual electrodes. “Having that intermediate step is very meaningful,” says Lori Holt, a psychologist at Carnegie Mellon University in Pittsburgh.

A decade ago, when Leonard first started graduate school, ECoG wasn’t even on his methodological radar, let alone facilitating breakthroughs like the one in his most recent study. He thinks the field of neurolinguistics will make similar strides in the coming decade. “I think we’re at an inflection point,” he says. “The pace of discovery has really picked up, and scientists are coalescing around theories of how the brain computes information.”

Leonard’s next project may occupy him for quite some time. He is leading a language training program for the Defense Advanced Research Projects Agency (DARPA), managing a team that hopes to enhance our ability to learn a new language by stimulating a few key nerves in the body.

But Leonard’s ECoG work will also continue. He is joined from time to time by a new researcher—the same epilepsy patient who helped design some of Leonard’s experiments. On an afternoon in late February, Leonard looks forward to chatting with his patient-turned-researcher about a paper the two wrote together and hope to publish this summer.

A year and a half after a successful surgery, the young man is now seizure-free. He has come back twice to volunteer in the UCSF lab to complete his high school senior-year project on ECoG. He plans to study neuroscience in college, inspired by the strange experience of analyzing data from his own brain.

© 2017 Aylin Woodward / UC Santa Cruz Science Communication Program

Aylin Woodward

Author

B.A. (biological anthropology) Dartmouth College

Internship: New Scientist (Boston)

My mother was always reluctant to take me to the zoo. I’d spend hours watching the silverbacks knuckle-walk back and forth like errant pendulums. That fascination for primates (Homo sapiens included) and their locomotor preferences followed me to adulthood. It ignited a fierce curiosity about why we walk the way we do, and why that matters.

Yet I also loved sparking debates, and my political science interests pulled me into the fraught world of social commentary. Could I spend the rest of my career begging chimpanzee calcanei to reveal their secrets?

Adrift in the sea of indecision, I spent a year helping Dartmouth’s president articulate his passions and priorities. Writing became the most powerful tool in my arsenal. Now, I want to harness that medium to tell the stories behind our evolution as a species.

Jacki Whisenant

Illustrator

B.F.A. (art and music performance) University of Wisconsin-Madison

Internship: Zoological Museum and Insect Research Collection, UW-Madison

Jacki Whisenant is an illustrator and musician with a fondness for bats, beetles, and unsung ecological heroes that might otherwise be overlooked. An early fascination with bats sparked an interest in unusual animals and plants, and her family’s love of books led to many trips to the library for volumes on the natural world. After graduating with a degree in the arts, she took several years of additional classes in the sciences. Working at the UW-Madison Zoological Museum was a pivotal experience that sharpened her interest in natural history.

She embraces the opportunity to explore new facets of study, particularly entomology and comparative anatomy. Illustrating notes while studying is a tremendous aid to memorization, linking visual information with terms and processes to facilitate learning. As an artist and educator, she is inspired to use skills gained in this course of study to further science education and enthusiasm.